I recently came across an old piece in The Atlantic on design research. Author and educator Jon Freach wrote, “Design can exist without ‘the research.’ But if we don't study the world, we don't always know how or what to create.”

His words resonated with me. Designers are innate problem solvers. Without “the research,” we wouldn’t know which problems to solve or for whom we are creating solutions.

One may argue that people generally don’t know what they want, and that it’s up to those of us creating something new to spark desire. Yet the creation process isn’t magic pulled out of thin air. It usually involves painstaking investigation of the world and deep inquiry into how we can make it better.

In other words, the shiny new thing that everyone wants is often a reincarnation of an existing idea, one that has been thoroughly interrogated and researched.

Smartphones existed before the first iPhone was introduced. Group chat was invented long before Slack was a company. Similarly, databases are not a new topic. When I started at Cockroach Labs several months ago, I wanted to do “the research” to figure out how this new database with a funny name could spark desire.

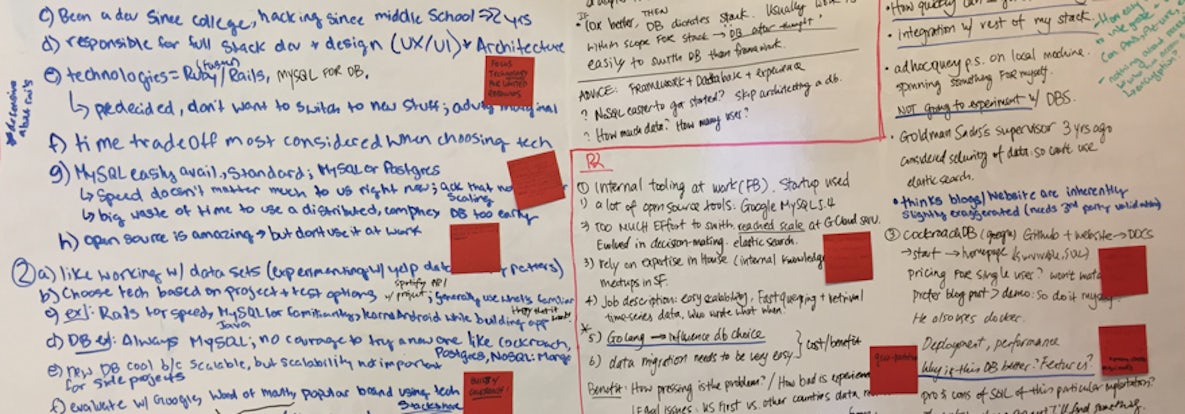

My first quest was to investigate the pain points people have with their current database solutions, and how they first hear about, test, and adopt alternatives. Not having been formally trained as a researcher, I hacked together a study with the help of GV’s extensive resources, then recruited and interviewed a few developers and CTOs. Yet the data I collected was scattered, with no clear pattern across the demographics.

Given how crucial these questions were to understanding our audience, I decided to reevaluate the research process and redo the study with some tweaks. Here’s what I learned from running the same study twice, and why the second study was far more effective.

Planning:

| Version 1 | Version 2 |
|---|---|
| I read up on available resources, wrote research objectives, and outlined questions. | I first sat down with our marketing department (the major stakeholder for this project) and tasked them with setting the objectives, key questions, and recruitment criteria for the study. |
| The interview guide was reviewed by the stakeholders, in this case our marketing department. | After they took a first stab at the interview guide, I commented and asked questions to make sure the study was focused and flowed smoothly. |
| I then wrote the recruitment criteria and passed them on to Marketing for review. | |

For the second study, I experimented with letting the stakeholders set the goals. The result was delightful: not only did we save a lot of time up front, but everyone was also on board with the research much earlier and much more excited about the results.

During planning, we also discussed our hypotheses on why the first study produced scattered data. We concluded that the interviewees were too different from one another, so we weren’t able to gather enough data on any single archetype of our audience. For the first study, I had recruited consultants, developers, and C-level decision-makers at companies of all sizes. For the second study, therefore, we decided to focus solely on developers who aren’t decision-makers at their companies or organizations.

Recruiting:

| Version 1 | Version 2 |
|---|---|
| I sought to interview both developers and C-level decision-makers at enterprises. | We recruited only developers who aren’t decision-makers at work. |
| Only Cockroach Labs swag was offered as an incentive for participants’ time. | We offered cash incentives in addition to our swag to broaden the appeal of participating. |
| I emailed our existing user base and got roughly as many responses as we needed for the study. | In addition to emailing our user base, we leveraged our engineering team’s network to broadcast our recruitment message on Facebook, Twitter, and LinkedIn. |

As a result, we had a bigger pool of potential participants the second time around and could handpick the most fitting profiles to interview. Unlike in the first study, our data points were far less scattered and revealed a clear pattern in how non-decision-making developers shop for databases.

Interviewing:

| Version 1 | Version 2 |

|---|---|

| I conducted all the interviews. | An additional researcher conducted all the interviews. |

| Half of the interview sessions were well attended by stakeholders and engineers. The rest were scheduled at inconvenient times for others to join and observe. | We booked all five sessions in one day during work hours, and all stakeholders were in the same room (at our own office!) so we had minimal setup time. |

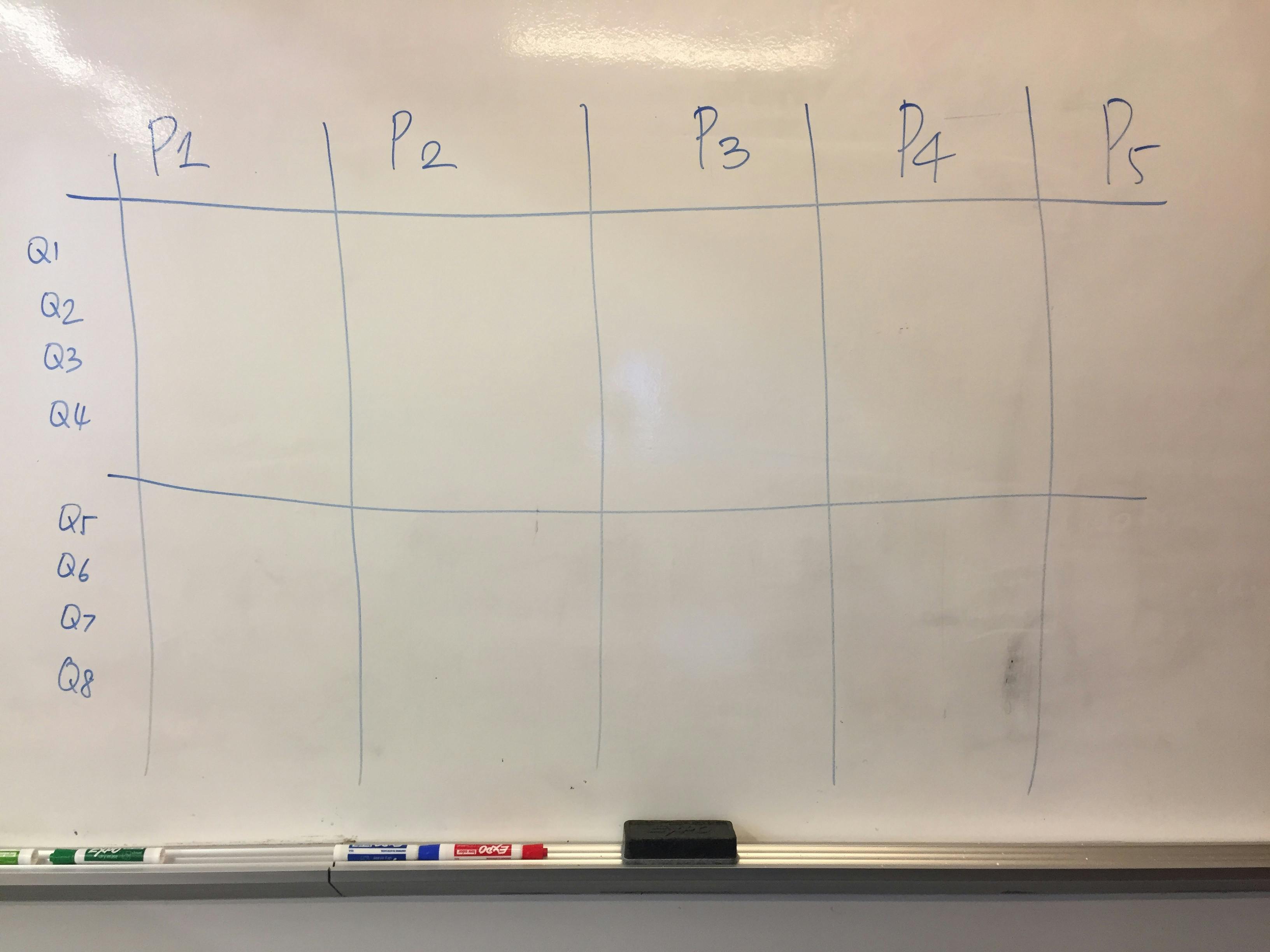

| We were part of a coworking space, which made it difficult to secure a single room for all the interviews. | We pre-drew grids on a whiteboard so we could easily identify patterns among participants. One person was responsible for noting on the whiteboard for each session. |

| I used video calls. | I used audio-only for all the interviews. |

For the second study, we were fortunate to have Michael Margolis, UX Research Partner at GV, conduct the interviews for us. His involvement freed us to pay closer attention to the responses developers gave.

Scheduling all the interviews in one day and having the entire group in the same room were key to making the interview phase successful. By clearing our schedules and dedicating a full day to research, we saved ourselves the time and energy otherwise lost to context switching. The pressure of having just one day for research also motivated us to perk up our ears and absorb as much information as we could.

Having everyone in the same room allowed us to hear the questions and pain points of our developers firsthand and to discuss the results as they came in. Comments and ideas bounced between departments, and insights were shared across teams immediately.

During the interviews, two changes proved especially useful. First, using audio instead of video reduced the awkwardness we had experienced in the first round of interviews. Though seeing faces was well intended, it made both the interviewer and the interviewee more self-conscious and guarded. Second, the whiteboard was an excellent, hands-on replacement for a collaborative Google Doc. By the end of the day, our research results were visually highlighted and annotated in front of us.

Conclusion:

It’s perhaps obvious why the second study was more successful: we involved our stakeholders early, invested time and incentives in recruiting, and observed the sessions as a group. In the end, we came away with clear patterns, actionable insights, and, most importantly, better questions to answer in the future.

Running the same study twice was a lesson of its own. Retracing our steps allowed us to identify opportunities for experiments and learn from our mistakes. Though we’re far from perfecting our user research process, it’s curiosity and rigor that motivate us to keep prototyping and learning about the people who use our product.

To Freach’s point, that’s how we know what to create.

For more thoughts on design and building product, follow along as I ramble Back and Forth on Medium.